...so I had been procrastinating on finishing this challenge for some weeks after starting it, and now it's done, phew!! :)

I came across this challenge by Forrest Brazeal while going through what I think was a course from GPS, and it caught my attention. In this post, I'll share my experience attempting the Cloud Resume Challenge on AWS.

I started this challenge a while before I recently landed my first role as a DevOps engineer, and I have had little time to complete it since. Before that, I had been working in IT support (Help Desk) and needed to gain as much practical cloud experience as I could.

The challenge is broken down into 6 chunks and requires us to build a simple resume site (front end) with a website visit counter (backend), all on AWS infrastructure. To reduce manual work (of course, I'll keep updating my resume as I grow in my career), we are also required, as a best practice, to automate as much of the implementation as possible using infrastructure-as-code and CI/CD workflows for seamless updates.

Let's dive in...

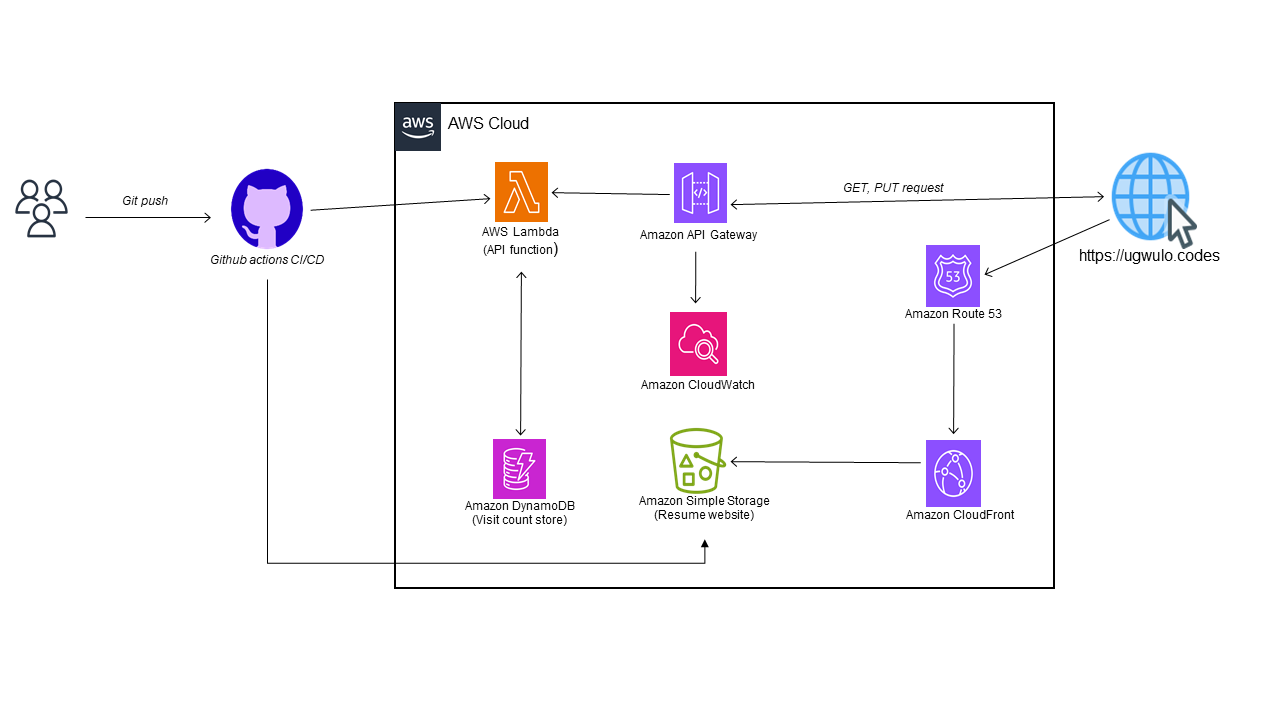

Architecture Overview

For the diagram, I used assets from AWS Architecture Icons and icons8.com.

Chunk 1: Building the front end

This chunk involved building the front end with HTML and CSS and hosting it as an Amazon S3 static website. I am not really good at front-end development, so I used a template by CeeVee and adjusted it to suit my taste and needs. We are also required to serve the site through CloudFront and point a custom domain at it; CloudFront caches content at edge locations, which reduces latency.

A custom domain gives the site a professional appeal: instead of a default endpoint like "my-c00l-resume-website.s3-website-us-east-1.amazonaws.com", you can have something like mine, ugwulo.codes, which is served over HTTPS too. You can register a domain with Amazon Route 53; I used my GitHub Student Developer Pack to get one from Name.com and secured it with a certificate from AWS Certificate Manager. I also played around with routing email for my custom domain through Route 53 by setting the appropriate records: a TXT (SPF) record to help prevent spam and phishing, and MX records to specify the mail servers, in my case Titan Email from Name.com.
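The email records described above can also be scripted. Below is a minimal sketch that builds the `ChangeBatch` dictionary accepted by boto3's Route 53 `change_resource_record_sets()`. The domain, mail hostnames, and SPF include are placeholders (not the values from my setup); use the exact records your email provider gives you.

```python
import json


def email_change_batch(domain, mx_hosts, spf_include, ttl=300):
    """Build a Route 53 ChangeBatch with MX and SPF (TXT) records.

    All hostnames passed in are placeholders; substitute your provider's values.
    """
    # MX values are "<priority> <mail server>"; lower numbers are tried first.
    mx_records = [
        {"Value": f"{10 * (i + 1)} {host}"} for i, host in enumerate(mx_hosts)
    ]
    # Route 53 expects TXT values to be wrapped in double quotes.
    spf_value = f'"v=spf1 include:{spf_include} ~all"'
    return {
        "Comment": "Email records for the custom domain",
        "Changes": [
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "MX",
                    "TTL": ttl,
                    "ResourceRecords": mx_records,
                },
            },
            {
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "TXT",
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": spf_value}],
                },
            },
        ],
    }


if __name__ == "__main__":
    batch = email_change_batch(
        "example.com",  # placeholder domain
        ["mx1.mail-provider.example", "mx2.mail-provider.example"],
        "spf.mail-provider.example",
    )
    print(json.dumps(batch, indent=2))
```

The result would be passed as `boto3.client("route53").change_resource_record_sets(HostedZoneId=..., ChangeBatch=batch)`. Note that `UPSERT` replaces the whole TXT record set for that name, so any other TXT values already on the domain (for example, verification strings) would need to be included in the same record set.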

Chunk 2: Building the API

My resume site has a counter feature that records each visit and stores the count in a database. It is not recommended to interact with a database directly from front-end code, and this is where the API comes in. I used Amazon API Gateway (REST API) with AWS Lambda functions written in Python, since this is serverless and saves a lot of cost thanks to its ability to scale to zero. I explored a microservice approach, with a separate GET function to retrieve the counter and a PUT function to record new counts in DynamoDB. It was interesting to learn about partition keys in DynamoDB, a NoSQL database, and how it scales horizontally by spreading data across partitions, as opposed to traditional SQL databases.
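Here is a minimal sketch of the separate GET and PUT functions described above, using boto3's DynamoDB `Table` resource. The table name coming from a `TABLE_NAME` environment variable, the partition key `id`, the item key `"resume"`, and the `visits` attribute are all assumptions for illustration. The PUT side uses an `ADD` update expression, so the increment happens atomically inside DynamoDB instead of as a read followed by a write.

```python
import os

# Hypothetical single-item key: partition key "id", one counter item for the site.
COUNTER_KEY = {"id": "resume"}


def _get_table(table=None):
    """Return an injected table, or the real DynamoDB table named in TABLE_NAME."""
    if table is not None:
        return table
    import boto3  # imported lazily so the handlers can be tried without AWS

    return boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])


def get_handler(event, context, table=None):
    """GET function: read the current count without modifying it."""
    item = _get_table(table).get_item(Key=COUNTER_KEY).get("Item", {})
    # DynamoDB returns numbers as Decimal; convert before JSON-encoding.
    return {"count": int(item.get("visits", 0))}


def put_handler(event, context, table=None):
    """PUT function: atomically add 1 to the counter in a single request."""
    response = _get_table(table).update_item(
        Key=COUNTER_KEY,
        # ADD creates the attribute (starting from 0) if it does not exist yet.
        UpdateExpression="ADD visits :inc",
        ExpressionAttributeValues={":inc": 1},
        ReturnValues="UPDATED_NEW",
    )
    return {"count": int(response["Attributes"]["visits"])}


class FakeTable:
    """Tiny in-memory stand-in mimicking the two Table methods used above."""

    def __init__(self):
        self.items = {}

    def get_item(self, Key):
        item = self.items.get(Key["id"])
        return {"Item": dict(item)} if item else {}

    def update_item(self, Key, UpdateExpression, ExpressionAttributeValues, ReturnValues):
        item = self.items.setdefault(Key["id"], dict(Key))
        item["visits"] = item.get("visits", 0) + ExpressionAttributeValues[":inc"]
        return {"Attributes": {"visits": item["visits"]}}
```

Lambda calls each handler with `(event, context)`, so the extra `table` argument is only used for local testing, for example `put_handler({}, None, table=FakeTable())`. These handlers return plain dictionaries; behind a Lambda proxy integration they would still need to be wrapped in a `statusCode`/`headers`/`body` response.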

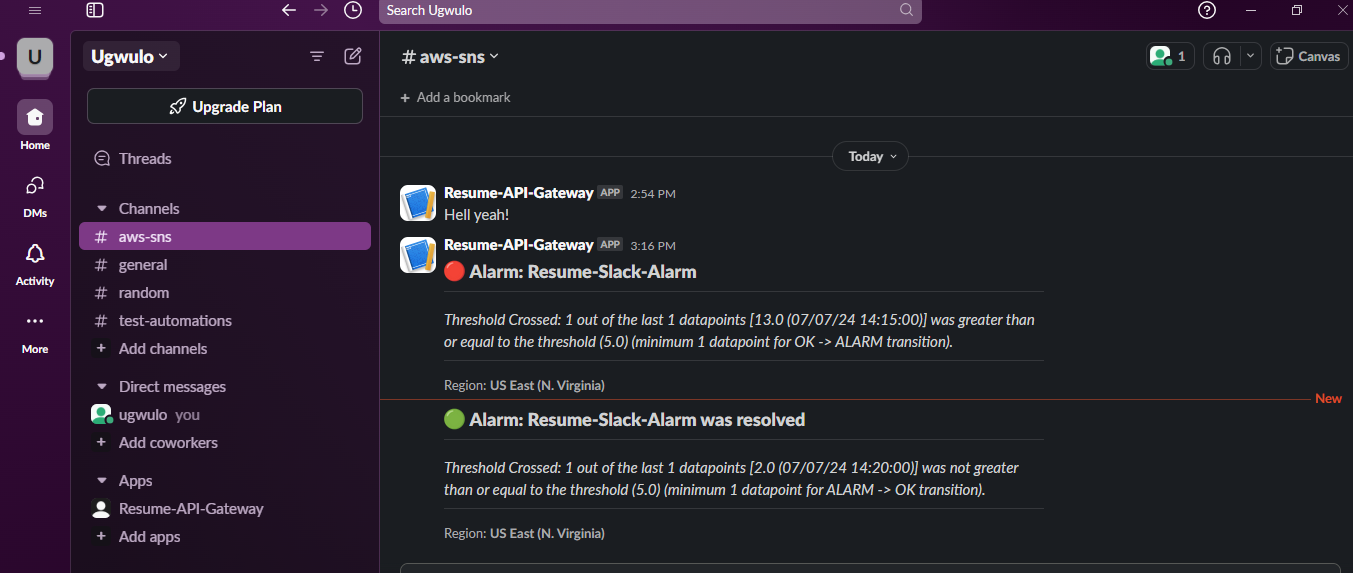

For monitoring, I set up a CloudWatch alarm that fires when requests to my API Gateway exceed a certain threshold. The alarm sends its state change to an SNS topic, and SNS then triggers another Lambda function that pushes the event to my Slack channel through a webhook. I also added an email subscription to the topic.
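The SNS-to-Slack step can be sketched as a small Lambda function using only the standard library. CloudWatch delivers the alarm details inside the SNS message as a JSON string (with fields such as `AlarmName`, `NewStateValue`, and `NewStateReason`), and a Slack incoming webhook accepts a JSON body with a `text` field. The `SLACK_WEBHOOK_URL` environment variable name is an assumption, not necessarily what I used.

```python
import json
import os
import urllib.request


def build_slack_message(sns_event):
    """Turn an SNS event carrying CloudWatch alarm notifications into a Slack payload."""
    lines = []
    for record in sns_event["Records"]:
        # CloudWatch publishes the alarm details to SNS as a JSON string.
        alarm = json.loads(record["Sns"]["Message"])
        lines.append(
            f"{alarm['AlarmName']} is {alarm['NewStateValue']}: {alarm['NewStateReason']}"
        )
    # Slack incoming webhooks accept a JSON body with a "text" field.
    return {"text": "\n".join(lines)}


def lambda_handler(event, context):
    """Entry point: SNS invokes this function, which POSTs to the Slack webhook."""
    request = urllib.request.Request(
        os.environ["SLACK_WEBHOOK_URL"],  # hypothetical variable name
        data=json.dumps(build_slack_message(event)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return {"status": response.status}
```

Keeping the message formatting in its own function makes it easy to test without calling Slack, and using `urllib` avoids packaging extra dependencies with the function.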

Chunk 3: Front-end / back-end integration

So far, each component works as expected, but we need to integrate all the parts (front end, API, database, etc.) so they work as one unit. I had to add a little JavaScript to my front end to call the resume API, which retrieves and saves count values in DynamoDB. For security, instead of AWS WAF (due to cost), I restricted access to the API endpoint with API Gateway API keys attached to a usage plan.
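My front end does this in JavaScript; the same call is sketched here in Python to show what API Gateway expects. When a method has "API Key Required" enabled and the key is associated with a usage plan, API Gateway reads the key from the `x-api-key` header. The endpoint URL in the usage line is a placeholder.

```python
import json
import urllib.request


def build_counter_request(api_url, api_key, method="GET"):
    """Build a request that carries the API key the way API Gateway expects it."""
    # API Gateway looks for the key in the x-api-key header on methods
    # that require an API key.
    return urllib.request.Request(api_url, method=method, headers={"x-api-key": api_key})


def fetch_count(api_url, api_key):
    """Call the counter endpoint and decode its JSON body."""
    with urllib.request.urlopen(build_counter_request(api_url, api_key), timeout=5) as response:
        return json.loads(response.read())
```

Usage would look like `fetch_count("https://<api-id>.execute-api.<region>.amazonaws.com/prod/count", key)`. One design caveat: a key embedded in browser-side code is visible to anyone who views the page source, so in this setup the usage plan's throttling and quota limits do most of the protective work, and AWS does not recommend API keys as the sole means of authentication.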

I ran into Cross-Origin Resource Sharing (CORS) issues and figured out that I had to edit the OPTIONS method in API Gateway and add header mappings to its integration response to allow any origin. I also had a hard time figuring out how to pass a query string parameter from the client (browser) to the backend (Lambda) function through API Gateway (this was before I split my Lambda into distinct GET and PUT functions). I later found out that I needed to set the Integration Type to Lambda and enable Lambda Proxy Integration in the GET method's Integration Request settings.
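With Lambda proxy integration enabled, API Gateway passes the whole request to the function (query string values arrive in `event["queryStringParameters"]`, which is `None` when there are none) and returns the function's response as-is. That also means the CORS header on the actual GET response has to come from the function itself; the OPTIONS method only answers the browser's preflight request. A minimal sketch, where the `action` parameter name is hypothetical:

```python
import json

# The post allows any origin; a stricter setup would use the site's own domain.
ALLOWED_ORIGIN = "*"


def lambda_handler(event, context):
    """Read a query string parameter and return a proxy-integration response."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    action = params.get("action", "get")  # hypothetical parameter name
    return {
        "statusCode": 200,
        # In proxy mode API Gateway forwards these headers unchanged, so the
        # CORS header must be set here for the browser to accept the response.
        "headers": {
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
            "Content-Type": "application/json",
        },
        # The body must be a string, so the payload is JSON-encoded.
        "body": json.dumps({"action": action}),
    }
```

For example, a request to `.../count?action=put` would reach the function as `{"queryStringParameters": {"action": "put"}, ...}`.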

Chunk 4: Automation / CI

It's time to automate our work. As I said earlier, I'll keep updating my resume as I grow in my career, and as a good practice we should automate repeatable actions using infrastructure-as-code. This helps with disaster recovery and documentation/versioning, reduces human error, and improves Lead Time for Changes, one of the core DORA metrics.

I used the AWS Serverless Application Model (SAM), an extension of AWS CloudFormation, to deploy my S3 bucket, Lambda functions, API Gateway, and DynamoDB table.
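SAM templates are usually written in YAML, but CloudFormation also accepts JSON, so the structure can be illustrated with a Python script that emits one. This is a minimal sketch, not my actual template: the logical IDs, `CodeUri`, handler names, runtime, and `/count` path are assumptions. The `Transform` line is what makes it a SAM template, `KeyType: HASH` marks the partition key, and each function's `Api` event makes SAM create the API Gateway REST API implicitly.

```python
import json


def counter_function(handler, method, policy):
    """One Lambda function plus the API Gateway event that invokes it."""
    return {
        "Type": "AWS::Serverless::Function",
        "Properties": {
            "CodeUri": "backend/",  # hypothetical source directory
            "Handler": handler,  # hypothetical module.function name
            "Runtime": "python3.12",
            "Environment": {"Variables": {"TABLE_NAME": {"Ref": "VisitorTable"}}},
            # SAM policy templates scope the function's IAM role to this table.
            "Policies": [{policy: {"TableName": {"Ref": "VisitorTable"}}}],
            "Events": {
                "CounterApi": {"Type": "Api", "Properties": {"Path": "/count", "Method": method}}
            },
        },
    }


def sam_template():
    """Assemble the S3 bucket, DynamoDB table, and two counter functions."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        # This transform turns a plain CloudFormation template into a SAM template.
        "Transform": "AWS::Serverless-2016-10-31",
        "Resources": {
            "SiteBucket": {"Type": "AWS::S3::Bucket"},
            "VisitorTable": {
                "Type": "AWS::DynamoDB::Table",
                "Properties": {
                    "BillingMode": "PAY_PER_REQUEST",
                    "AttributeDefinitions": [{"AttributeName": "id", "AttributeType": "S"}],
                    # HASH = partition key.
                    "KeySchema": [{"AttributeName": "id", "KeyType": "HASH"}],
                },
            },
            "GetCountFunction": counter_function("app.get_handler", "get", "DynamoDBReadPolicy"),
            "PutCountFunction": counter_function("app.put_handler", "put", "DynamoDBCrudPolicy"),
        },
    }


if __name__ == "__main__":
    print(json.dumps(sam_template(), indent=2))
```

Giving the read-only GET function `DynamoDBReadPolicy` and only the PUT function write access keeps each function's permissions as narrow as its job.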

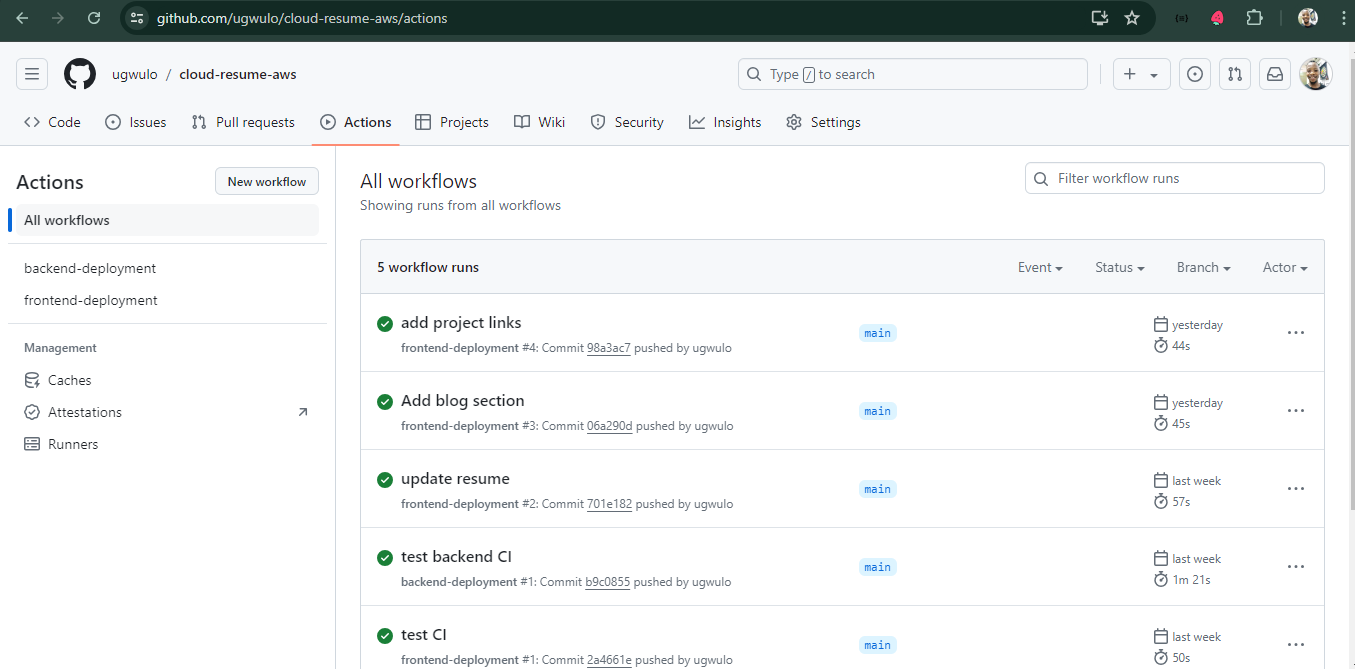

For continuous integration, I used GitHub Actions with a trunk-based approach to automate my front-end builds and CloudFront invalidations, and to deploy the SAM stack that updates my backend whenever changes are pushed. I had a hard time creating conditions that trigger a workflow only when commits touch specific directory paths in my branch, because of how my project tree is structured. In the end, I created two separate workflows, one triggered by backend changes and another by front-end changes.
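The CloudFront invalidation step is commonly a single CLI call in the workflow, `aws cloudfront create-invalidation --distribution-id <ID> --paths "/*"`. The same request through boto3 is sketched below; the distribution ID is whatever your stack created, and the default `"/*"` path (invalidate everything) is an assumption that suits a small static site.

```python
import time


def invalidation_batch(paths=("/*",), caller_reference=None):
    """Build the InvalidationBatch argument for boto3's cloudfront.create_invalidation()."""
    items = list(paths)
    return {
        "Paths": {"Quantity": len(items), "Items": items},
        # CallerReference must be unique per request; a timestamp is a simple choice.
        "CallerReference": caller_reference or str(time.time()),
    }


def invalidate(distribution_id, paths=("/*",)):
    """Ask CloudFront to drop cached copies so the new front-end build is served."""
    import boto3  # imported lazily so invalidation_batch() works without AWS credentials

    client = boto3.client("cloudfront")
    return client.create_invalidation(
        DistributionId=distribution_id,
        InvalidationBatch=invalidation_batch(paths),
    )
```

In a workflow, passing something unique per run (such as the commit SHA) as the caller reference would make retries of the same run idempotent, since CloudFront treats a repeated reference with the same paths as the same request.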

Chunk 0: Certification prep

I decided to save this for last. The challenge requires that we get at least the AWS Certified Cloud Practitioner certification before proceeding. For me, I have been taking part in a couple of cloud training fellowships and have had the opportunity to earn my AWS CCP, SAA, and DVA certifications. They didn't come easy: I had to put in a lot of work through hands-on practice, reading AWS documentation, peer learning, and practice questions to pass each one on the first attempt. Even though certifications don't equate to experience, I have come to realize that they help you understand certain concepts in depth while pushing you beyond your limits to learn things you would normally sweep under the carpet. Below are my AWS certifications :)

AWS Certified Solutions Architect – Associate

AWS Certified Cloud Practitioner

AWS Certified Developer – Associate

Thank you for reading this far! If you would like to see the final result, please see my resume page here. My code can also be found on GitHub. Please feel free to like and drop a comment below.